In this week’s column we dig deeper to figure out exactly how many people cheated on predictions, and whether this cheating had any impact on the results. Additionally, we will revisit five recently decided cases: Johnson v. US, Bloate v. US, Reed Elsevier v. Muchnick, Milavetz, and Mac’s Shell Service v. Shell Oil.

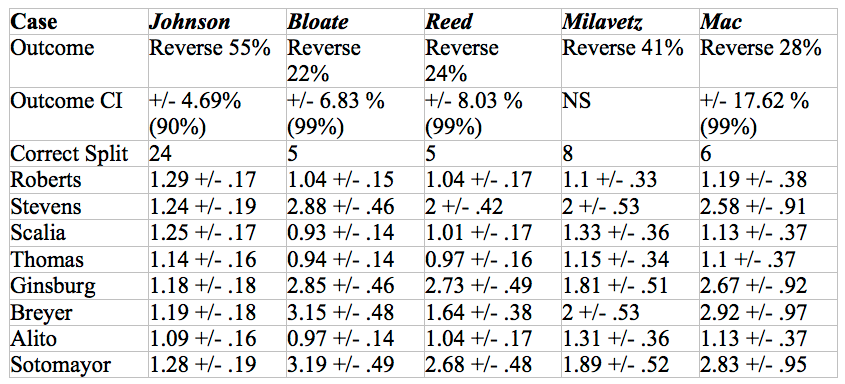

For each case, we have recorded our outcome statistics and SMRs (standardized mortality ratios). The SMR provides a method to test whether or not users perceive the Court as dominated by conservative ideology.

In Johnson, the Court in a 7-2 decision reversed the 11th Circuit’s decision to apply federal law to determine whether someone committed a “violent felony” for the purpose of the Armed Career Criminal Act. With a total of 304 predictions, 55% of users predicted that the Court would reverse the decision, at a 90% confidence level. 24 users predicted the correct split, while 9 users correctly predicted that Thomas and Alito would dissent. Alito had the lowest SMR and Thomas the second lowest, supporting the conclusion that Thomas and Alito would be the minority. Additionally, neither Thomas’ nor Alito’s SMR was significantly different from 1, indicating that Roberts and Scalia “defected” from the “conservative” view. Ginsburg was the only liberal Justice whose SMR was not significantly different from 1, although Breyer was close. Overall, the results reflected the formation of a large majority with 2 conscientious objections.
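The column does not say how its confidence levels are computed. One reading that fits the Johnson figures is a two-sided test of the reversal share against an even 50/50 split, using the normal approximation. The sketch below assumes that reading; the function names and the 90/95/99% thresholds are illustrative choices, not the authors' stated method.

```python
import math

def two_sided_p_value(share, n, null_share=0.5):
    """Two-sided p-value for an observed share under the normal approximation.

    Assumption: the null hypothesis is an even split (null_share = 0.5).
    """
    standard_error = math.sqrt(null_share * (1 - null_share) / n)
    z = (share - null_share) / standard_error
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)) for a standard normal.
    return math.erfc(abs(z) / math.sqrt(2))

def confidence_level(share, n, levels=(0.99, 0.95, 0.90)):
    """Return the highest conventional confidence level cleared, or None."""
    p = two_sided_p_value(share, n)
    for level in levels:
        if p < 1 - level:
            return level
    return None

# Johnson: 55% of 304 predictions called for a reversal.
johnson_level = confidence_level(0.55, 304)
```

Under these assumptions the Johnson figures clear the 90% level but not the 95% level, which matches the level reported above.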

In Bloate, the Court issued another 7-2 decision, reversing an 8th Circuit decision holding that the delay resulting from pretrial motions was sufficiently related to an on-going trial to be excluded from the time in which a trial must commence under the Speedy Trial Act. Out of 241 votes, only 22% of users predicted a reversal, with a confidence level of 99%. Only 5 users got the correct disposition and split, and no users correctly guessed which Justices would be in the minority. The SMRs indicate a very strong tendency for a unanimous decision. However, this finding is problematic because the most often predicted disposition was an affirmance. All of the conservative Justices had SMRs not significantly above 1, while the liberal Justices had extremely high SMRs. These results indicate that there was no clear conservative vs. liberal position, or that the decision was made on a basis beyond ideology. Given that Breyer and Alito were the minority, the final outcome supports this proposition.

For more analysis of the remainder of the cases, and an investigation of FantasySCOTUS cheating, read on.

In Reed, the Court voted in a fragmented opinion to reverse a 2nd Circuit decision holding that federal law did not grant jurisdiction to certify a class including the holders of unregistered copyrights. Out of 187 predictions, 24% predicted that the Court would reverse the decision, once again at a 99% confidence level. 5 users predicted the overall split, but only 1 user predicted that Sotomayor would not be voting. The SMRs once again show a strong tendency towards a unanimous majority, and minus Sotomayor, all Justices did concur in the result. As for Sotomayor, her SMR was the second highest and significantly above 1. Given that the case came from the 2nd Circuit, it was plausible that she would not participate in the decision, which suggests that she should have had a much lower SMR than the other Justices. Given the difference between the observed data and the actual outcome, the SMRs suggest that lower court proceedings take less priority than ideology, the briefs, or the legal question in the case when users make predictions.

In Milavetz, the Court issued a unanimous decision reversing the 8th Circuit’s decision that an attorney who provides bankruptcy assistance for valuable consideration is considered a “debt relief agency” under the Bankruptcy Abuse Prevention and Consumer Protection Act. Out of only 66 total predictions, 41% predicted a reversal, but not at a statistically significant level. 8 users correctly guessed the split. The conservative Justices had SMRs not significantly above 1, while the liberal Justices had SMRs significantly above 1. This indicates that users saw a unanimous decision as likely, and the wrong disposition is somewhat countered by the lack of statistical significance in the users’ predictions. As part of a trend, however, Milavetz serves as another data point where the tendency for a majority was correct, but the direction of that majority was wrong.

In Mac’s Shell Service Inc., the Court reversed a 1st Circuit decision holding that the Petroleum Marketing Practices Act did not support a claim for constructive nonrenewal on behalf of a service station against an oil distributor. With only 43 total predictions, 28% predicted the reversal at a 99% confidence level. Additionally, 6 users predicted the correct split, constituting half of the people who predicted the reversal. The SMRs once again support a conclusion of a broad majority, although the predicted disposition was again incorrect.

Given this case and the three discussed before it, it seems that while users are good at predicting the tendency for a majority to form, they often predict the wrong disposition. One common thread of all five cases is that they involve definitional and procedural portions of federal statutes. Although the majority of users predicted the correct disposition in Johnson, the high number of predictions, of users who predicted the correct split, and of users who predicted the minority indicates a heightened interest that might have caused users to do more research about the case. When combined with the lack of predictions about Sotomayor’s recusal in Reed, this suggests that many predictions may be based on first impressions tempered by oral arguments and overall speculation and interest in individual cases.

After two users admitted cheating a few weeks ago, we decided to do some digging and figure out whether anyone else took advantage of a loophole in the scoring system. The loophole is simple to exploit:

When designing the system, I decided to allow people to make predictions up until the moment a case is decided by the Supreme Court. On days when opinions are handed down, I lock down the voting once I see that the Court has issued an opinion for a specific case. On Wednesday, the Supreme Court announced Maryland v. Shatzer at 10:00 a.m. I did not lock down the votes until around 11:30 a.m. In this period, several members changed their votes to get more points.
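The loophole described above comes down to a time window between the announcement and the lock. A minimal sketch of how such predictions could be flagged follows; the function name, the sample timestamps, and the calendar date are hypothetical, and only the 10:00 a.m. and 11:30 a.m. times come from the description above.

```python
from datetime import datetime

def changed_after_announcement(prediction_time, announced_at, locked_at):
    """True if a prediction was made or changed after the opinion was
    announced but before voting on the case was locked."""
    return announced_at <= prediction_time < locked_at

# Hypothetical calendar date; the column only gives the times of day.
announced = datetime(2010, 1, 6, 10, 0)   # opinion announced at 10:00 a.m.
locked = datetime(2010, 1, 6, 11, 30)     # votes locked around 11:30 a.m.

# Hypothetical prediction timestamps for illustration.
sample_times = {
    "before": datetime(2010, 1, 6, 9, 45),
    "during": datetime(2010, 1, 6, 10, 20),
}
flags = {
    name: changed_after_announcement(t, announced, locked)
    for name, t in sample_times.items()
}
```

A flagged prediction is only suspect, not proven cheating, since a user could have changed a vote without having seen the opinion.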

Our analysis found that out of over 8,000 predictions, 651 predictions were made on days when decisions were handed down. These comprise less than 10% of our total predictions, so closing down predictions on decision days would not disrupt the majority of predictions. Out of those 651 predictions made on decision days, only 23 predictions were made or altered on the same day as the case was decided.
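The shares implied by these counts can be checked with a few lines of arithmetic. The variable names are illustrative, and because the total is given only as "over 8,000", the sketch uses 8,000 as a floor, so the shares measured against the total are upper bounds.

```python
# Counts reported in the column; total is a floor ("over 8,000").
total_predictions = 8000
decision_day_predictions = 651
same_day_as_case_predictions = 23

decision_day_share = decision_day_predictions / total_predictions
suspect_share_of_decision_day = same_day_as_case_predictions / decision_day_predictions
suspect_share_of_total = same_day_as_case_predictions / total_predictions

print(f"Decision-day share of all predictions: {decision_day_share:.1%}")
print(f"Suspect share of decision-day predictions: {suspect_share_of_decision_day:.1%}")
print(f"Suspect share of all predictions: {suspect_share_of_total:.1%}")
```

This works out to roughly 8.1%, 3.5%, and 0.3% respectively, consistent with the "less than 10%" figure.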

The 23 predictions came from 16 users, with 3 users making suspect predictions for 3 cases. As for the cases, Maryland v. Shatzer attracted the most with 6 suspect votes, with Smith v. Spisak and Mohawk v. Carpenter tied at 4. We will reach out to the users behind these suspect votes to ascertain whether each change was an honest mistake or an intentional attempt to cheat.

Overall, these cases attracted significant numbers of predictions, minimizing the overall effect of the problem predictions on our statistics. These numbers indicate that cheating is an isolated event, and in most cases, could be attributed to honest mistakes or system issues. Fortunately, my faith in humanity is not rocked. But next season, I will impose some significant controls to prevent this cheating.

Many thanks to Corey Carpenter for his exemplary assistance with this post.